Introduction to Twitter sentiment analysis technology

Sentiment analysis is a challenging problem in natural language processing (NLP), text analysis, and computational linguistics. Broadly, it aims to determine the opinions users express about various objects or issues. Early work focused on long texts (e.g., letters and emails). As the Internet grew, users increasingly turned to social media for all kinds of interaction (sharing, commenting, recommending, making friends, etc.). This activity generates large volumes of data that carry rich information and reflect users' underlying behavioral patterns, and data at this scale requires automated techniques for mining and analysis.

Most sentiment analysis studies use machine learning methods. Texts are typically classified either into two classes (positive or negative) or into more categories, e.g., positive, negative, and neutral (or irrelevant). Sentiment analysis techniques for Twitter content fall into three groups: lexical analysis, machine learning-based analysis, and hybrid analysis.

1. Lexical analysis:

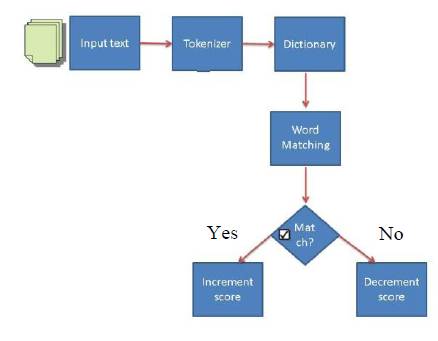

This technique relies on a dictionary (lexicon) of pre-tagged words. A lexical analyzer splits the input text into individual words, and each word is matched against the dictionary. Every positive match adds to the text's total score: for example, if "dramatic" is listed as positive in the dictionary, the total score of the text is incremented. Conversely, every negative match decreases the total score. Although the technique seems rather naive, it has proven valuable in practice. The figure above illustrates how lexical analysis works.
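A minimal Python sketch of the scoring scheme described above, assuming a tiny hypothetical lexicon (`LEXICON`) with +1/-1 weights and a regex tokenizer standing in for the lexical analyzer. It also labels the text by the sign of its total score, a simple thresholding rule assumed here; all helper names are illustrative.

```python
import re

# Hypothetical toy lexicon of pre-tagged words: +1 = positive, -1 = negative.
LEXICON = {
    "good": 1, "great": 1, "dramatic": 1, "love": 1,
    "bad": -1, "awful": -1, "hate": -1, "boring": -1,
}

def tokenize(text: str) -> list[str]:
    """A very simple lexical analyzer: lowercase word tokens only."""
    return re.findall(r"[a-z']+", text.lower())

def lexicon_score(text: str, lexicon: dict[str, int] = LEXICON) -> int:
    """Add the score of every word found in the lexicon; unknown words count 0."""
    return sum(lexicon.get(word, 0) for word in tokenize(text))

def classify(text: str) -> str:
    """Label a text by the sign of its total score (assumed threshold of 0)."""
    score = lexicon_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

if __name__ == "__main__":
    tweet = "What a dramatic finish, I love this game!"
    print(lexicon_score(tweet), classify(tweet))  # 2 positive
```

Practical lexicons also handle negation ("not good"), intensifiers, and emoticons, which this sketch ignores.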

A text is classified according to its total score. A large body of work has examined how reliable lexical information is. For individual phrases, manually tagging words (adjectives only) achieves roughly 80% accuracy, although the result depends on how subjective the evaluated text is. Instead of tagging words by hand, some researchers used Internet search engines to determine word polarity. They sent two queries to the AltaVista search engine, target word + "good" and target word + "bad", and derived the final score from the number of search results; this raised accuracy from 62% to 65%. Later, other researchers used the WordNet database: they computed the minimum path distance (MPD) between the target word and "good" and "bad" in the WordNet graph, converted the MPD into a fractional score, and stored that score in the lexical dictionary. This method reaches 64% accuracy. Other researchers evaluated the semantic gap by simply removing positive words from the set of negative words and obtained 82% accuracy. Lexical analysis also has a drawback: its accuracy drops rapidly as the number of words in the dictionary grows.
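The WordNet approach can be illustrated on a toy graph. The sketch below assumes a small hypothetical synonym graph (`SYNONYMS`) in place of WordNet, computes minimum path distances with breadth-first search, and converts them into a score with `(d_bad - d_good) / d(good, bad)`. That normalization is one common choice assumed here, not necessarily the exact formula used in the cited work.

```python
from collections import deque

# Hypothetical toy synonym graph standing in for WordNet's adjective links.
SYNONYMS = {
    "good": ["fine", "decent"],
    "fine": ["good", "okay"],
    "decent": ["good", "okay"],
    "okay": ["fine", "decent", "mediocre"],
    "mediocre": ["okay", "poor"],
    "poor": ["mediocre", "bad"],
    "bad": ["poor"],
}

def min_path_distance(graph, start, goal):
    """Breadth-first search: number of edges on the shortest path, or None."""
    if start == goal:
        return 0
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        word, dist = queue.popleft()
        for neighbor in graph.get(word, []):
            if neighbor == goal:
                return dist + 1
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, dist + 1))
    return None  # goal unreachable from start

def mpd_score(graph, word):
    """Map MPDs to a score in [-1, 1]; positive means closer to "good"."""
    d_good = min_path_distance(graph, word, "good")
    d_bad = min_path_distance(graph, word, "bad")
    d_ref = min_path_distance(graph, "good", "bad")
    if None in (d_good, d_bad, d_ref) or d_ref == 0:
        return 0.0  # not connected to both anchors: treat as neutral
    return (d_bad - d_good) / d_ref

if __name__ == "__main__":
    for w in ["fine", "okay", "mediocre", "poor"]:
        print(w, mpd_score(SYNONYMS, w))
```

With this toy graph, `mpd_score(SYNONYMS, "fine")` evaluates to 0.6 and `mpd_score(SYNONYMS, "poor")` to -0.6.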

2. Machine learning-based analysis:

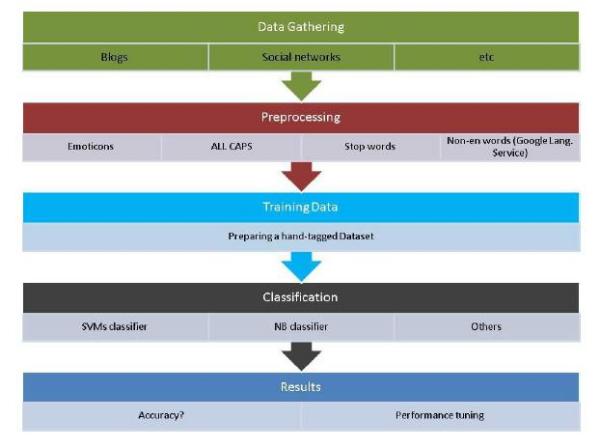

Machine learning techniques have received increasing attention because of their adaptability and high accuracy. Sentiment analysis mainly uses supervised learning, which proceeds in three phases: data collection, preprocessing, and classifier training.
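Tweets usually need some normalization before features are extracted. A minimal sketch of the preprocessing phase, assuming typical Twitter-specific steps (lowercasing, replacing URLs and @mentions with placeholder tokens, dropping the `#` from hashtags, shortening letters repeated three or more times). The exact steps vary between studies, and `preprocess_tweet` is a hypothetical helper.

```python
import re

# Regexes for Twitter-specific artifacts (assumed normalization rules).
URL_RE = re.compile(r"https?://\S+")
MENTION_RE = re.compile(r"@\w+")
HASHTAG_RE = re.compile(r"#(\w+)")
REPEAT_RE = re.compile(r"([a-z])\1{2,}")
TOKEN_RE = re.compile(r"<url>|<user>|[a-z0-9']+")

def preprocess_tweet(text: str) -> list[str]:
    """Normalize a raw tweet and return its tokens."""
    text = text.lower()
    text = URL_RE.sub(" <url> ", text)       # replace links with a placeholder
    text = MENTION_RE.sub(" <user> ", text)  # anonymize @mentions
    text = HASHTAG_RE.sub(r"\1", text)       # "#win" -> "win"
    text = REPEAT_RE.sub(r"\1\1", text)      # "sooooo" -> "soo"
    return TOKEN_RE.findall(text)

if __name__ == "__main__":
    print(preprocess_tweet("Sooooo happy with @acme support!!! #win https://t.co/xyz"))
```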

Training requires a labeled corpus as training data. The classifier represents each text as a feature vector and classifies the target data based on these vectors. In machine learning techniques, appropriate feature selection is the key to the classifier's accuracy. Typically, unigrams (single words), bigrams (two consecutive words), and trigrams (three consecutive words) can all serve as features. Other features include the number of positive words, the number of negative words, and the length of the document; commonly used classifiers include Support Vector Machines (SVM) and Naive Bayes (NB). Depending on the combination of features chosen, accuracy ranges from 63% to 80%. The figure above shows the main steps involved in machine learning-based analysis.
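The feature types listed above can be combined into a single sparse feature dictionary. A sketch under stated assumptions: the token list is already preprocessed, `POSITIVE_WORDS` and `NEGATIVE_WORDS` are tiny hypothetical word lists, and the feature naming scheme is illustrative.

```python
from collections import Counter

# Hypothetical mini word lists; a real system would use a full sentiment lexicon.
POSITIVE_WORDS = {"good", "great", "love", "happy"}
NEGATIVE_WORDS = {"bad", "awful", "hate", "sad"}

def ngrams(tokens: list[str], n: int) -> list[str]:
    """Return all runs of n consecutive tokens, joined by spaces."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def extract_features(tokens: list[str], max_n: int = 3) -> Counter:
    """Build a sparse feature dict: n-gram counts plus simple document statistics."""
    features = Counter()
    for n in range(1, max_n + 1):  # unigrams, bigrams, trigrams
        for gram in ngrams(tokens, n):
            features[f"{n}gram={gram}"] += 1
    features["num_positive"] = sum(t in POSITIVE_WORDS for t in tokens)
    features["num_negative"] = sum(t in NEGATIVE_WORDS for t in tokens)
    features["length"] = len(tokens)
    return features

if __name__ == "__main__":
    feats = extract_features(["not", "good", "at", "all"])
    print(feats["2gram=not good"], feats["num_positive"], feats["length"])  # 1 1 4
```

Bigrams such as `not good` capture negation that unigrams miss. A dictionary of this form could be vectorized (for example with scikit-learn's `DictVectorizer`) and passed to an SVM or Naive Bayes classifier.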

Machine learning techniques also face several challenges: designing the classifier, obtaining training data, and correctly interpreting previously unseen phrases. Unlike lexical analysis, however, they continue to work well even as the number of words grows exponentially.

3. Hybrid analysis:

Progress in sentiment analysis research has led many researchers to explore combining the two approaches, exploiting both the high accuracy of machine learning methods and the speed of lexical analysis. Some researchers used a set of two-word phrases together with unlabeled data to classify these phrases into positive and negative classes. Pseudo-documents are generated from all the words in the selected phrase set. The cosine similarity between each pseudo-document and each unlabeled document is then computed, and each document is classified as positive or negative based on this similarity. The resulting labeled data set is then fed into a Naive Bayes classifier for training.
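The pseudo-document labeling step can be sketched as follows. Assumptions: the seed phrases in `POSITIVE_SEEDS` and `NEGATIVE_SEEDS` are hypothetical, documents are bags of lowercase words, and each document takes the label of the pseudo-document it is more similar to, with ties left unlabeled.

```python
import math
import re
from collections import Counter

# Hypothetical two-word seed phrases for each class.
POSITIVE_SEEDS = ["very good", "highly recommend", "works great"]
NEGATIVE_SEEDS = ["very bad", "waste money", "stopped working"]

def bag_of_words(text: str) -> Counter:
    """Lowercase word counts."""
    return Counter(re.findall(r"[a-z']+", text.lower()))

def pseudo_document(phrases: list[str]) -> Counter:
    """Pool all words of the seed phrases into one bag of words."""
    return bag_of_words(" ".join(phrases))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def label_unlabeled(docs, pos_seeds, neg_seeds):
    """Return (document, label) pairs for documents closer to one pseudo-document."""
    pos_doc = pseudo_document(pos_seeds)
    neg_doc = pseudo_document(neg_seeds)
    labeled = []
    for doc in docs:
        bag = bag_of_words(doc)
        pos_sim, neg_sim = cosine(bag, pos_doc), cosine(bag, neg_doc)
        if pos_sim > neg_sim:
            labeled.append((doc, "positive"))
        elif neg_sim > pos_sim:
            labeled.append((doc, "negative"))
        # equal similarity (e.g. no overlap at all): leave the document unlabeled
    return labeled

if __name__ == "__main__":
    docs = [
        "I highly recommend it, works great",
        "Total waste of money, it stopped working",
        "The package arrived on Tuesday",
    ]
    for doc, label in label_unlabeled(docs, POSITIVE_SEEDS, NEGATIVE_SEEDS):
        print(label, "|", doc)
```

The returned `(document, label)` pairs would then serve as the training set for the Naive Bayes classifier.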

Some researchers have proposed a unified framework that uses background lexical information in the form of word-class associations, and designed a multinomial Naive Bayes classifier that also incorporates manually labeled data during training. They report that exploiting lexical knowledge improves performance.
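The framework's details are not given here, so the following is only a rough illustration of the idea: a multinomial Naive Bayes classifier with Laplace smoothing in which each lexicon word adds `lexicon_weight` pseudo-counts to its class before training. This way of injecting lexical knowledge is an assumption of the sketch, not the cited framework; `LexiconPriorNB` and its parameters are hypothetical.

```python
import math
from collections import Counter

class LexiconPriorNB:
    """Multinomial Naive Bayes with Laplace smoothing plus lexicon pseudo-counts.

    ASSUMPTION: adding `lexicon_weight` counts for each lexicon word in its class
    is one simple way to inject prior lexical knowledge, chosen for illustration.
    """

    def __init__(self, lexicon=None, lexicon_weight=1.0, alpha=1.0):
        self.lexicon = lexicon or {}  # word -> class label
        self.lexicon_weight = lexicon_weight
        self.alpha = alpha  # Laplace smoothing constant

    def fit(self, docs, labels):
        """docs: list of token lists; labels: one class label per document."""
        self.classes = sorted(set(labels))
        self.class_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.classes}
        for tokens, label in zip(docs, labels):
            self.word_counts[label].update(tokens)
        # Inject lexical knowledge as extra (pseudo) word counts.
        for word, label in self.lexicon.items():
            if label in self.word_counts:
                self.word_counts[label][word] += self.lexicon_weight
        self.vocab = set()
        for counts in self.word_counts.values():
            self.vocab.update(counts)
        self.totals = {c: sum(self.word_counts[c].values()) for c in self.classes}
        return self

    def predict(self, tokens):
        """Return the class with the highest log posterior."""
        n_docs = sum(self.class_counts.values())
        v = len(self.vocab)
        best, best_score = None, -math.inf
        for c in self.classes:
            score = math.log(self.class_counts[c] / n_docs)  # log prior
            for t in tokens:
                if t not in self.vocab:
                    continue  # ignore words seen neither in training nor in the lexicon
                score += math.log((self.word_counts[c][t] + self.alpha)
                                  / (self.totals[c] + self.alpha * v))
            if score > best_score:
                best, best_score = c, score
        return best

if __name__ == "__main__":
    docs = [["great", "phone"], ["love", "it"], ["terrible", "battery"], ["hate", "it"]]
    labels = ["pos", "pos", "neg", "neg"]
    model = LexiconPriorNB(lexicon={"superb": "pos", "awful": "neg"}, lexicon_weight=2.0)
    model.fit(docs, labels)
    print(model.predict(["superb"]))  # pos
```

Because `superb` never occurs in the labeled documents, only the lexicon pseudo-counts let the model classify it.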
