Modern sentiment analysis methods

Sentiment analysis (SA) is a common application of natural language processing (NLP) methods, in particular of text classification, whose purpose is to extract the emotional content of text. With sentiment analysis, qualitative data can be analyzed quantitatively through sentiment scores. Although sentiment is inherently subjective, quantitative sentiment analysis already has many practical uses: for example, helping companies understand how users react to their products, or detecting hate speech in online reviews.

The simplest form of sentiment analysis uses a dictionary (lexicon) of positive and negative words. Each word is assigned a sentiment score, usually +1 for positive and -1 for negative, and the scores of all the words in a sentence are simply summed to give its total score. This approach has obvious flaws, the most important being that it ignores context and neighboring words. For example, the phrase "not good" gets a total score of 0, because "not" is -1 and "good" is +1, yet a human reader would classify it as negative despite the presence of "good".
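The dictionary approach can be sketched in a few lines of Python. This is a minimal illustration, assuming a tiny hypothetical lexicon (`LEXICON`) and plain whitespace tokenization; real lexicons contain thousands of scored words. It reproduces the "not good" failure case described above.

```python
# Hypothetical toy lexicon; "not" is scored -1 to mirror the example in the text.
LEXICON = {"good": 1, "great": 1, "happy": 1, "bad": -1, "terrible": -1, "not": -1}

def lexicon_score(text):
    """Sum the lexicon scores of all whitespace-separated tokens; unknown words score 0."""
    return sum(LEXICON.get(token, 0) for token in text.lower().split())

print(lexicon_score("the movie was good"))  # 1
print(lexicon_score("not good"))            # 0, although a human would call it negative
```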

Another common approach is to treat text as a "bag of words". Each text is represented as a vector of length N, where N is the size of the vocabulary. Each position corresponds to one word, and its value is the number of times that word occurs in the text. For example, with the vocabulary [bag, of, words], the phrase "bag of bag of words" is encoded as [2, 2, 1]. These vectors can be used as input to machine learning algorithms such as logistic regression and support vector machines (SVM) to perform classification, which makes it possible to predict the sentiment of unseen data. Note that this is supervised learning: the classifier must first be trained on data whose sentiment is already known.
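The sketch below builds bag-of-words count vectors and trains a small logistic regression on them with plain batch gradient descent. It is a stdlib-only illustration under stated assumptions: the helper names, the toy training sentences and labels, and the learning-rate and epoch settings are all hypothetical. In practice one would usually rely on a library such as scikit-learn (`CountVectorizer`, `LogisticRegression`) instead.

```python
import math

def build_vocabulary(texts):
    """Assign each distinct word a column index, in order of first appearance."""
    vocab = {}
    for text in texts:
        for word in text.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab

def bag_of_words(text, vocab):
    """Count vector of length len(vocab); words not in the vocabulary are ignored."""
    vector = [0] * len(vocab)
    for word in text.lower().split():
        if word in vocab:
            vector[vocab[word]] += 1
    return vector

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic_regression(X, y, lr=0.5, epochs=200):
    """Fit weights and bias by batch gradient descent on the mean log-loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def predict(x, w, b):
    """Return 1 (positive) if the predicted probability is at least 0.5, else 0 (negative)."""
    return 1 if sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) >= 0.5 else 0

# Hypothetical toy training data: 1 = positive, 0 = negative.
train_texts = ["good great", "great fun", "bad awful", "awful boring"]
train_labels = [1, 1, 0, 0]

vocab = build_vocabulary(train_texts)
X = [bag_of_words(t, vocab) for t in train_texts]
w, b = train_logistic_regression(X, train_labels)

print(bag_of_words("bag of bag of words", build_vocabulary(["bag of bag of words"])))  # [2, 2, 1]
print(predict(bag_of_words("a great film", vocab), w, b))   # 1 ("a" and "film" are out of vocabulary)
print(predict(bag_of_words("an awful film", vocab), w, b))  # 0
```

Because the vocabulary is indexed in order of first appearance, "bag of bag of words" maps to [2, 2, 1] exactly as in the example above. Words never seen during training simply contribute nothing to the vector.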

Although this is a significant improvement over the dictionary approach, it still ignores context, and the dimensionality of the vectors grows with the size of the vocabulary.

Word2Vec and Doc2Vec

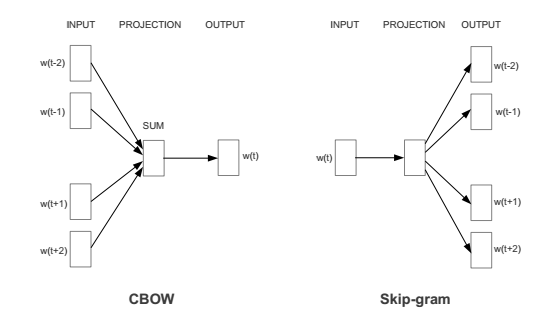

In recent years, Google has developed a method called Word2Vec that captures the context of words while also reducing the dimensionality of the data. Word2Vec comes in two variants: CBOW (Continuous Bag of Words) and Skip-gram.

In CBOW, the goal is to predict a word given its neighbors, while Skip-gram does the opposite: it predicts the surrounding words given a single word (see the figure above). Both methods train a shallow artificial neural network. Initially, each word in the vocabulary is assigned a random N-dimensional vector; during training, the algorithm uses the CBOW or Skip-gram objective to learn an optimal vector for each word.
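To make the two objectives concrete, the sketch below generates only the (input, target) training examples each variant would feed to the network for a fixed context window; the neural network that actually learns the vectors is omitted. The function names and the `window` parameter are illustrative assumptions. In practice, libraries such as gensim expose both variants through the `sg` parameter of `Word2Vec` (0 for CBOW, 1 for Skip-gram).

```python
def skipgram_pairs(tokens, window=2):
    """Skip-gram: each (center word, one context word) pair is a separate training example."""
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

def cbow_examples(tokens, window=2):
    """CBOW: all context words inside the window are the input, the center word is the target."""
    examples = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        examples.append((context, center))
    return examples

tokens = "the cat sat".split()
print(skipgram_pairs(tokens, window=1))
# [('the', 'cat'), ('cat', 'the'), ('cat', 'sat'), ('sat', 'cat')]
print(cbow_examples(tokens, window=1))
# [(['cat'], 'the'), (['the', 'sat'], 'cat'), (['cat'], 'sat')]
```

Note how Skip-gram produces one example per (center, context) pair, whereas CBOW produces one example per position with the whole context as input.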

These word vectors capture the context in which words appear, so relationships between words can be explored with basic vector arithmetic (e.g., "king" - "man" + "woman" ≈ "queen"). Instead of bag-of-words vectors, these word vectors can be used as input to a classification algorithm to predict sentiment. This has two advantages: words are related to their context, and the feature space has very low dimensionality (typically about 300, compared with a vocabulary of about 100,000 words). Because the neural network extracts these features for us, very little manual feature engineering is needed. Since texts vary in length, the average of all the word vectors in a document can be used as the input to the classification algorithm to classify the whole document.
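The vector arithmetic and the document-averaging step can be illustrated with a handful of hand-made vectors. The 3-dimensional values in `VECTORS` below are hypothetical, chosen so the analogy works; real Word2Vec vectors are learned from a corpus and are much longer. As is common practice, the query words themselves are excluded when searching for the nearest word by cosine similarity.

```python
import math

# Hypothetical hand-made vectors for illustration only (not learned from data).
VECTORS = {
    "king":   [0.9, 0.8, 0.1],
    "queen":  [0.9, 0.1, 0.8],
    "man":    [0.1, 0.9, 0.1],
    "woman":  [0.1, 0.1, 0.9],
    "prince": [0.8, 0.9, 0.1],
    "apple":  [0.1, 0.1, 0.1],
}

def add(u, v):
    return [a + b for a, b in zip(u, v)]

def sub(u, v):
    return [a - b for a, b in zip(u, v)]

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def analogy(a, b, c, vectors):
    """Return the word whose vector is most similar to vec(a) - vec(b) + vec(c), excluding a, b, c."""
    target = add(sub(vectors[a], vectors[b]), vectors[c])
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(target, vectors[w]))

def document_vector(tokens, vectors):
    """Average the vectors of all known tokens, so any text maps to one fixed-size vector."""
    known = [vectors[t] for t in tokens if t in vectors]
    if not known:
        return [0.0] * len(next(iter(vectors.values())))
    return [sum(column) / len(known) for column in zip(*known)]

print(analogy("king", "man", "woman", VECTORS))         # queen
print(document_vector(["man", "woman"], VECTORS))       # [0.1, 0.5, 0.5]
```

The averaged vector has the same length regardless of how many words the document contains, which is what lets a standard classifier consume it.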

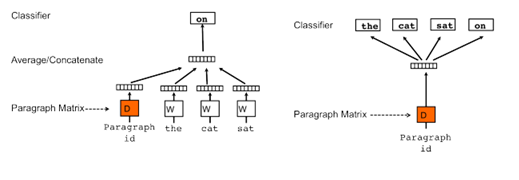

Quoc Le and Tomas Mikolov proposed the Doc2Vec approach to represent texts of varying length. It is essentially the same as Word2Vec, except that a paragraph (document) vector is learned in addition to the word vectors. It also comes in two variants: DM (Distributed Memory) and DBOW (Distributed Bag of Words). DM tries to predict a word given the preceding words and the paragraph vector.

DBOW uses only the paragraph vector to predict a random set of words from the paragraph (see the figure above). Once trained, the paragraph vectors can be fed directly to a sentiment classifier, without having to aggregate the individual word vectors.
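As with Word2Vec, the difference between the two Doc2Vec variants lies in how training examples are built. The sketch below generates them for a single document: DM pairs the document ID plus the preceding `window` words with the next word, while DBOW pairs the document ID alone with randomly sampled words. The function names, `window`, `sample_size`, and the document ID are hypothetical, and learning the vectors is omitted. In gensim, the variant is selected with the `dm` parameter of `Doc2Vec` (1 for DM, 0 for DBOW).

```python
import random

def dm_examples(doc_id, tokens, window=2):
    """DM: (document ID + the `window` preceding words) -> next word."""
    examples = []
    for i in range(window, len(tokens)):
        context = tuple(tokens[i - window:i])
        examples.append(((doc_id, context), tokens[i]))
    return examples

def dbow_examples(doc_id, tokens, sample_size=3, seed=0):
    """DBOW: document ID alone -> each of a few randomly sampled words from the document."""
    rng = random.Random(seed)  # seeded so the sample is reproducible
    sampled = rng.sample(tokens, k=min(sample_size, len(tokens)))
    return [(doc_id, word) for word in sampled]

doc = "the film was surprisingly great".split()
for example in dm_examples("doc_0", doc):
    print(example)
# (('doc_0', ('the', 'film')), 'was')
# (('doc_0', ('film', 'was')), 'surprisingly')
# (('doc_0', ('was', 'surprisingly')), 'great')
print(dbow_examples("doc_0", doc))
```

After training on such examples, the learned vector for each document ID is what would be passed to the sentiment classifier.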
