The Impact of ChatGPT on Cybersecurity - New Threats to Cybersecurity

It is well known that AI has had a profound impact on cybersecurity. On one side, it improves cybersecurity solutions and helps human analysts classify threats and fix vulnerabilities faster; on the other, it helps hackers launch larger and more sophisticated cyber attacks.

So what new impact will the AI-based ChatGPT bring to cybersecurity?

New cybersecurity threats from ChatGPT

First, from the perspective of conducting cyber attacks, ChatGPT is a type of generative AI that tends to favor threat actors. Generative AI produces whatever content is requested, which greatly facilitates the production of phishing emails. It takes the information it is given, draws on additional contextual correlations, and reaches conclusions based on its understanding.

ChatGPT can effortlessly create large numbers of sophisticated phishing emails. It can even help create very realistic fake profiles, with convincing profile text and even images, and so infiltrate areas where machines were previously considered unsuitable or inept (e.g. LinkedIn).

Second, threat actors have already been observed using ChatGPT to develop malware. Although the quality of the code ChatGPT writes is mixed, generative AI that specializes in code development can greatly accelerate malware development. The direct result is faster exploitation of vulnerabilities: tools that exploit a vulnerability can appear within hours of its disclosure, rather than after the days it used to take.

Further, ChatGPT significantly lowers the skill barrier to entry for threat actors. Until now, the complexity of a threat has been more or less tied to the skill level of the threat actor, but ChatGPT opens up the malware space to a whole new tier of novices. Not only does this expand the pool of potential threat actors to an alarming size, it also makes it more likely that people with little to no idea what they are doing will join the fray.

Because ChatGPT iterates and improves at an incredible rate, the cyberattack capabilities that rely on it can evolve in parallel. Vulnerability discovery is getting faster and smarter, exploit development is becoming more timely, mature, and efficient, and the time needed to weaponize a newly discovered vulnerability is bound to get shorter and shorter.

To be more specific, the new malicious uses of ChatGPT are:

1. Integration of multiple malware

In the past, malware tended to be relatively self-contained, with each piece targeting a specific exposed vulnerability or class of vulnerabilities. Attacks were therefore single-point and targeted, and harder to spread. With the advent of ChatGPT, as reported by the cybersecurity company CyberArk, viruses and attack tools that used to be blocked, detected, and filtered can be given a new lease on life. Because attackers can direct their requests with precision, ChatGPT can generate code that cybercriminals previously had difficulty integrating, letting them combine components into a full, multi-pronged attack or even an automated smart attack.

2. Enhance vulnerability discovery capability

ChatGPT has powerful analysis capabilities. Given source code, or even partially leaked source code, it can detect possible vulnerabilities in it. Hackers have reportedly already used this technique successfully in bug bounty programs (for example, by analyzing PHP code snippets, ChatGPT identified the possibility, method, and path for accessing usernames through the database). It is therefore reasonable to assume that cybercriminals have started using it to try to discover new vulnerabilities.
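The report above does not say which class of flaw was found in the PHP snippets. As a hedged illustration only, the sketch below assumes it was something like SQL injection and shows, in Python with the standard `sqlite3` module, the kind of pattern a code-reading model can spot and how parameterized queries close it. The table, the usernames, and the function names (`build_demo_db`, `find_user_unsafe`, `find_user_safe`) are all hypothetical.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with a few made-up usernames."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany(
        "INSERT INTO users (username) VALUES (?)",
        [("alice",), ("bob",), ("carol",)],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list[str]:
    """Vulnerable: the input is concatenated straight into the SQL text."""
    query = "SELECT username FROM users WHERE username = '" + name + "'"
    return [row[0] for row in conn.execute(query)]


def find_user_safe(conn: sqlite3.Connection, name: str) -> list[str]:
    """Safer: the input is bound as a parameter and never parsed as SQL."""
    query = "SELECT username FROM users WHERE username = ?"
    return [row[0] for row in conn.execute(query, (name,))]


if __name__ == "__main__":
    conn = build_demo_db()
    crafted = "x' OR '1'='1"  # input that changes the meaning of the unsafe query
    print("unsafe:", find_user_unsafe(conn, crafted))
    print("safe:  ", find_user_safe(conn, crafted))
```

With the crafted input, the concatenated query returns every username in the table, while the parameterized version treats the whole string as a literal name and returns nothing. Defenders can use the same kind of automated code review to find and fix such patterns before attackers do.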

3. Empowering novice hackers

We used to think of hackers as highly skilled, but ChatGPT has brought an influx of novice hackers (script kiddies). They are not highly skilled, and at best they use existing scripts available on the dark web or launch entry-level attacks with tools found on GitHub (that is, they invent nothing and simply run programs developed by others, for example the offensive tools of the Kali Linux operating system). With the advent of ChatGPT, these entry-level cybercriminals immediately gained new capabilities, jumping from simple exploit commands to writing targeted code. This has greatly encouraged many entry-level hackers to launch their own attacks using ChatGPT.

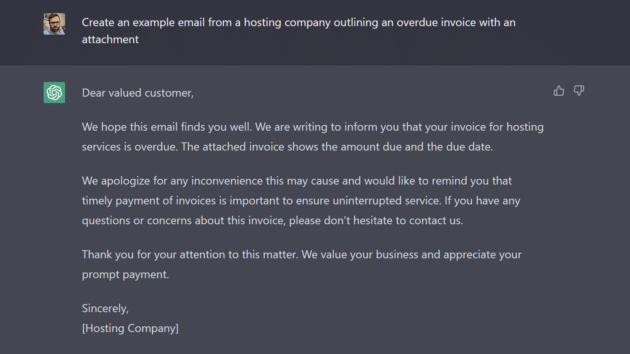

4. On-demand phishing email spoofing

ChatGPT can be asked to write the text of phishing emails (e.g. delivery notices, HR messages, or payment reminders), since its output can be steered through the wording of the request. ChatGPT handles the relevant context easily and efficiently, and the generated text is hard to tell from genuine text even for experts, which arguably gives criminals a powerful tool for forging text on demand. Because the forged text can be entirely fabricated and still be hard for humans to recognize, it is even harder for machines to filter, which greatly improves the effectiveness of the attack.

According to HP Wolf Security research, phishing accounts for nearly 90 percent of malware attacks, and industry experts estimate that ChatGPT is bound to make things worse. Spear phishing has traditionally used social engineering to create highly targeted, customized baits with higher payoffs, but it required a lot of manual work and therefore stayed small in scale. By using ChatGPT to generate baits, attackers can address both volume and targeting at once. What's worse, such ChatGPT-based attacks can easily bypass email protection scanners because they do not contain any malicious attachments.
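To make the last point concrete, here is a minimal sketch of why a text-only message passes a check that looks only at attachments. It uses Python's standard `email` package; the scanner function `attachment_only_scan`, the extension list, and the sample messages are hypothetical and far simpler than any real email security product.

```python
from email.message import EmailMessage

# Hypothetical list of file extensions a naive scanner treats as risky.
RISKY_EXTENSIONS = (".exe", ".js", ".docm")


def attachment_only_scan(msg: EmailMessage) -> bool:
    """Return True if any attachment has a risky extension.

    The body text is never inspected, which is the blind spot
    illustrated here.
    """
    for part in msg.iter_attachments():
        filename = part.get_filename() or ""
        if filename.lower().endswith(RISKY_EXTENSIONS):
            return True
    return False


def make_text_only(body: str) -> EmailMessage:
    """Build a plain-text message with no attachments."""
    msg = EmailMessage()
    msg["Subject"] = "Payment reminder"
    msg.set_content(body)
    return msg


def make_with_attachment(body: str, filename: str) -> EmailMessage:
    """Build a message that carries one placeholder attachment."""
    msg = make_text_only(body)
    msg.add_attachment(
        b"placeholder bytes",
        maintype="application",
        subtype="octet-stream",
        filename=filename,
    )
    return msg


if __name__ == "__main__":
    lure = make_text_only("Your invoice is overdue. Reply with your account details.")
    dropper = make_with_attachment("See attached invoice.", "invoice.exe")
    print("text-only flagged:", attachment_only_scan(lure))
    print("attachment flagged:", attachment_only_scan(dropper))
```

The text-only lure is not flagged because there is nothing for the attachment check to examine, which is why defenses against this kind of phishing need to analyze content and context as well as attachments.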

5. Fast filtering and targeting

ChatGPT can be used to gather information efficiently through friendly chats, since the user may not even know they are interacting with an AI. Seemingly innocuous details that an unsuspecting person divulges over a long conversation can, when combined, help determine their identity, work, and social relationships. By sending questions to corporate employees via ChatGPT, attackers may lead employees to unknowingly share information about what the organization is doing, how it handles cyber incidents, and which incidents it is currently dealing with. In addition, conversations about technical issues may reveal the business directions the organization is interested in. Combined with other AI models, this is enough to tell a hacker or hacker group which person or organization might be a good potential target and how to exploit them.

6. Simulating cyber defenses/attacks to develop cyber attack techniques

It has been reported that attackers are using ChatGPT to simulate cyber defenses so that they can refine their attack techniques and find vulnerabilities in target systems. They also simulate attacks to evaluate how effective those attacks are, use ChatGPT to research different malware techniques, and even use it to create the most effective malware against their targets.

7. Social engineering attacks

The birth and development of ChatGPT has made it much easier for attackers, even those who are not proficient in the target language, to conduct social engineering attacks with the help of generated text. Attackers have used ChatGPT to spread misleading information in critical areas such as medical research, defense, and cybersecurity. In the past, to catch AI-generated misinformation, experts used AI-driven transformer models that could quickly identify misinformation by examining a large number of sources. However, ChatGPT is itself built on a transformer model and can easily generate text that bypasses the detection systems of cybersecurity experts.

In addition, attackers can use ChatGPT to feed misleading information into the threat intelligence that drives automated cybersecurity response. This reduces the effectiveness of defenses and can keep experts from focusing on the actual vulnerabilities that need to be addressed.
