Navigating Data Scraping: Techniques, Applications, and Security Measures

In today's digital age, the ability to extract and utilize data from the web is a powerful asset for businesses and researchers alike. Data scraping, also known as web scraping, lies at the heart of this capability, enabling the extraction of valuable information from websites for a multitude of purposes. From enhancing market research to optimizing operational efficiencies, the applications of data scraping are diverse and far-reaching.

Understanding Data Scraping

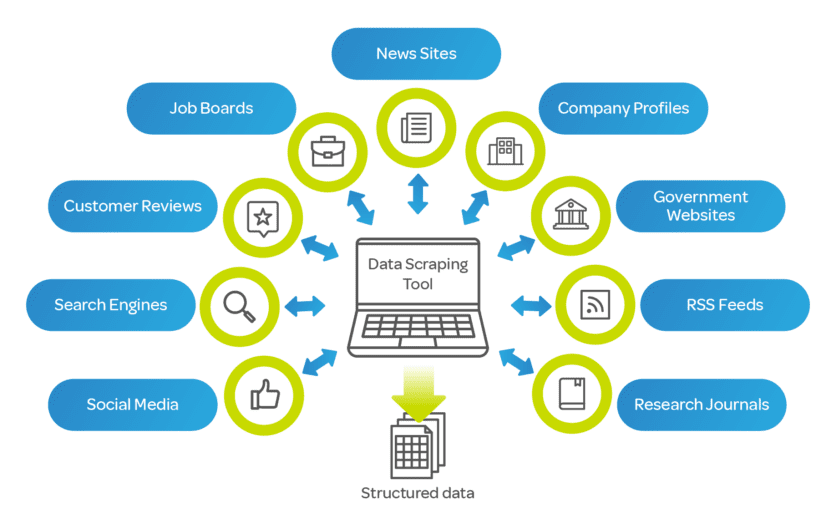

Data scraping is a technique where a computer program extracts data from another program's human-readable output. When that output is a website, the practice is usually called web scraping. The process typically involves importing data from websites into files or spreadsheets for personal or commercial use. Data scraping is widely used because it gathers information from the web and transfers it to another platform efficiently.

Data scraping has several practical applications, including:

Business Intelligence: Collecting data to inform web content and strategic decisions.

Pricing Analysis: Gathering pricing information for travel booking or comparison sites.

Market Research: Finding sales leads and conducting research through public data sources like social media platforms and directories.

E-commerce Integration: Transferring product data from e-commerce sites to online shopping platforms like Google Shopping.

While data scraping can be used legitimately to enhance business operations, it also has the potential for misuse. For instance, scraping can be employed to harvest email addresses for spamming or to copy copyrighted content for unauthorized publication. Due to such potential for abuse, some countries have regulations against automated email harvesting for commercial purposes, considering it an unethical practice.

3 Main Types of Data Scraping

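Report mining: Programs extract data from human-readable reports that a system has already generated, such as text, HTML, or PDF files. It's a bit like printing a page, except the output goes to a file that can be parsed instead of to a printer.

Screen scraping: The tool reads what a legacy application displays on screen so the information can be moved into a modern system.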

Web scraping: Tools pull data from websites into reports users can customize.

How Does Data Scraping Work?

Interested in extracting data from a trusted source? Here's how you can dive right in using specialized tools designed for the task.

Web scrapers, in essence, follow a straightforward three-step process:

Request: Sends an HTTP GET request to fetch the content of a specified webpage.

Parse: Identifies and extracts the precise data fields you've targeted.

Display: Organizes the extracted information into a customizable report or format of your choice.
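The three steps above can be sketched in a few lines of Python using only the standard library. This is a minimal illustration, not how any particular tool works: the function names (fetch, parse_links, display), the choice to extract links, and the sample HTML are all assumptions made for the example.

```python
from html.parser import HTMLParser
from urllib.request import urlopen


def fetch(url):
    """Request: issue an HTTP GET and return the response body as text."""
    with urlopen(url, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class LinkParser(HTMLParser):
    """Parse: collect the href and visible text of every <a> element."""

    def __init__(self):
        super().__init__()
        self.links = []      # list of (href, text) pairs found so far
        self._href = None    # href of the <a> currently open, if any
        self._text = []      # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def parse_links(html):
    parser = LinkParser()
    parser.feed(html)
    parser.close()
    return parser.links


def display(links):
    """Display: format the extracted links as a simple two-column report."""
    return "\n".join(f"{text:<20} {href}" for href, text in links)


# Hypothetical sample page, used so the example runs without network access.
SAMPLE_HTML = '<ul><li><a href="/a">Alpha</a></li><li><a href="/b">Beta</a></li></ul>'
print(display(parse_links(SAMPLE_HTML)))
```

To run it against a live page, the sample string would be replaced with the result of fetch() on a URL you are permitted to scrape.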

While these tools may seem complex to develop, they're surprisingly accessible for everyday users. Here are three user-friendly data scraping tools perfect for exploration:

Data Scraper: A Chrome extension that effortlessly captures data from any visited webpage, allowing you to specify the format without any coding required.

Data Miner: Available as extensions for Chrome and Microsoft Edge, this tool scrapes data directly into CSV files, ideal for easy manipulation in Excel or other spreadsheet applications.

Data Scraping Crawler: Designed for extracting specific data like phone numbers, email addresses, or social media profiles, this tool conveniently exports data to Excel and can be set to update fields automatically.

These tools empower users to gather and analyze data effectively, whether for personal projects, business insights, or research purposes. With their intuitive interfaces and robust functionalities, experimenting with data scraping has never been more accessible.

The Dynamics of Data Scraping

Web scraping is usually carried out by automated scripts known as scraper bots. The practice plays a pivotal role in various sectors, though it also fuels a continuous contest between scrapers and content protection measures.

The process of web scraping typically unfolds in several steps:

HTTP Request: A scraper bot initiates an HTTP GET request to a specific website to retrieve its content.

HTML Parsing: Upon receiving the website's response, the scraper parses the HTML document to locate and extract desired data patterns.

Data Conversion: Extracted data is then converted into a structured format tailored to the scraper bot's requirements.
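The data conversion step is often the least glamorous one, so a small sketch may help. The example below assumes the parsing step has already produced raw (name, price) strings. The field names, the dollar-sign price format, and the helper names to_record and to_csv are hypothetical choices for illustration.

```python
import csv
import io
from decimal import Decimal


def to_record(raw_name, raw_price):
    """Normalize one scraped (name, price) pair into a typed record."""
    # Strip whitespace, the currency symbol, and thousands separators.
    price = Decimal(raw_price.strip().lstrip("$").replace(",", ""))
    return {"name": raw_name.strip(), "price": price}


def to_csv(records):
    """Serialize the records as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"], lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


# Hypothetical raw strings, as they might come out of the parsing step.
raw_rows = [("  Widget ", "$1,299.00"), ("Gadget", " $15.50")]
records = [to_record(name, price) for name, price in raw_rows]
print(to_csv(records))
```

Using Decimal rather than float keeps prices exact, which matters if the records are later compared or summed.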

Scraper bots serve diverse purposes, including:

Content Scraping: Copying valuable content, such as product reviews or service listings, so that a competing site can replicate the unique advantage that content gives the original.

Price Scraping: Aggregating pricing data to gain insights into competitors' strategies and market positioning.

Contact Scraping: Extracting contact details like email addresses and phone numbers from websites, often for bulk mailing lists or malicious uses in social engineering.

While data scraping facilitates legitimate activities such as market research and business intelligence, it also poses significant cybersecurity challenges. Websites may unknowingly expose sensitive data to scrapers, leading to potential misuse or exploitation by malicious actors.

For instance, scraped data can be leveraged in:

Phishing Attacks: Tailoring phishing attempts by using scraped information to personalize malicious emails, targeting specific individuals or organizations.

Password Cracking: Exploiting publicly available data to guess passwords or security answers, enhancing the effectiveness of credential cracking attempts.

Techniques in Data Scraping

Several techniques are employed in data scraping to effectively retrieve and process website content:

HTML Parsing: Extracts text, links, and other elements from HTML pages using scripts that target specific data patterns.

DOM Parsing: Utilizes the Document Object Model (DOM) to navigate and extract structured data from web pages, enhancing scraping efficiency for dynamically generated content.

Vertical Aggregation: Employs specialized platforms to automate data extraction for specific industry verticals, minimizing manual intervention in data harvesting processes.

XPath: Uses XML Path Language (XPath) to navigate through XML documents, facilitating precise data extraction based on predefined criteria.

Google Sheets Integration: Utilizes Google Sheets' IMPORTXML function to scrape and import data directly into spreadsheets, providing a straightforward method for web data extraction and analysis.

These techniques empower businesses and researchers to harness web data for various purposes, from market analysis to operational optimization. However, they also underscore the importance of ethical considerations and cybersecurity measures in managing and protecting data accessed through scraping activities.
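As a rough illustration of the XPath technique, the sketch below queries a small XML document with Python's standard xml.etree.ElementTree module, which supports only a limited subset of XPath and requires well-formed XML. The catalog data and the function name titles_in_category are invented for the example.

```python
import xml.etree.ElementTree as ET

# Hypothetical catalog document standing in for scraped XML.
CATALOG = """
<catalog>
  <product category="book"><title>Atlas</title><price>30.00</price></product>
  <product category="toy"><title>Robot</title><price>12.50</price></product>
  <product category="book"><title>Primer</title><price>8.75</price></product>
</catalog>
"""


def titles_in_category(xml_text, category):
    """Return the title of every product in the given category."""
    root = ET.fromstring(xml_text)
    # XPath: each <title> whose parent <product> has a matching category attribute.
    path = f".//product[@category='{category}']/title"
    return [node.text for node in root.findall(path)]


print(titles_in_category(CATALOG, "book"))
```

Google Sheets' IMPORTXML function takes a URL and an XPath query as its two arguments, so a path expression in this style is also what a spreadsheet-based scrape would use.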

Safeguarding Your Data: 4 Effective Strategies

In today's digital landscape, protecting your valuable information is crucial while maintaining your online presence. Here are four strategies to safeguard your sensitive data:

Limit Requests: Implement rate-limiting rules to control the number of requests from individual IP addresses within a specified timeframe. This prevents excessive pinging of your server, reducing the risk of data scraping attempts.

Apply CAPTCHA: Introduce CAPTCHA challenges for users making multiple requests from the same IP address. CAPTCHA prompts require human interaction to verify identity, which stops many automated scraping tools that are unable to solve these puzzles.

Use Images: Embed sensitive data, such as contact information and pricing details, within images instead of plain text. Most web scraping tools parse text rather than images, so automated scripts would need an extra step such as optical character recognition to extract and misuse your data.

Obfuscate Text: Employ techniques like textual obfuscation (e.g., using "[at]" instead of "@") to confuse data scraping tools. Simple changes in text formatting can defeat scrapers that search for exact patterns, although a determined scraper can adapt to them.
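Two of the strategies above, rate limiting and text obfuscation, can be sketched briefly in Python. This is one possible approach under stated assumptions, not a production design: the sliding-window algorithm, the class name SlidingWindowRateLimiter, the helper obfuscate_email, and the in-memory storage are all choices made for the example.

```python
import time
from collections import defaultdict, deque


class SlidingWindowRateLimiter:
    """Allow at most max_requests per client IP within window_seconds."""

    def __init__(self, max_requests, window_seconds, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._clock = clock                   # injectable so tests can control time
        self._hits = defaultdict(deque)       # client IP -> timestamps of recent requests

    def allow(self, client_ip):
        now = self._clock()
        hits = self._hits[client_ip]
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False                      # over the limit: reject or show a CAPTCHA
        hits.append(now)
        return True


def obfuscate_email(address):
    """Replace '@' with ' [at] ' so simple pattern-matching scrapers miss it."""
    return address.replace("@", " [at] ")


limiter = SlidingWindowRateLimiter(max_requests=3, window_seconds=60)
# 203.0.113.5 is an address from a range reserved for documentation.
print([limiter.allow("203.0.113.5") for _ in range(4)])
print(obfuscate_email("sales@example.com"))
```

A real deployment would more likely enforce limits at a reverse proxy or in shared storage, since an in-memory dictionary is lost on restart and is not shared between server processes.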

While complete protection of all data may be challenging, these proactive measures help fortify your sensitive web pages against unauthorized access and misuse. By integrating these strategies into your data protection framework, you can enhance security while maintaining your competitive edge online.

Conclusion

As data continues to play a pivotal role in decision-making and innovation, understanding the nuances of data scraping becomes increasingly crucial. By employing effective techniques, maintaining ethical standards, and implementing robust security measures, businesses can harness the power of web data responsibly. Whether you're leveraging scraping tools for competitive analysis or safeguarding against potential threats, navigating the complexities of data scraping ensures that you stay ahead in an interconnected digital landscape.